Organizations across a wide variety of industries commonly cite managing AI-related risk as a top challenge to AI adoption as they work toward Responsible AI. Responsible AI is no longer just a “nice to have”; it is a key driver of AI adoption.

Dataiku and Deloitte have been developing strategies to help companies build compelling Responsible AI frameworks. Dataiku recently hosted a Dataiku Product Days panel on the topic featuring Andrew Muir, National Lead Partner for AI and Automation Offerings at Deloitte, and Michael Vinelli, Senior Manager of Strategy/Omnia AI at Deloitte. This post covers the highlights of the conversation.

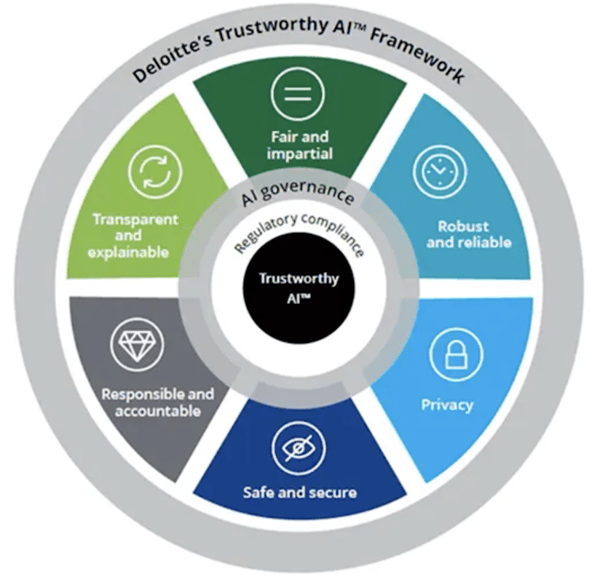

The Wheel of Trustworthy AI

In Deloitte’s Trustworthy AI framework, responsible and accountable AI is one of six key components needed to trust AI outputs. Deloitte built the framework from its experience with clients across many industries that have encountered pitfalls and failures in Responsible AI, and designed it to guide organizations in managing AI risks and reaching their responsibility goals. Note that Responsible AI, while slightly different in scope, is a key component of Trustworthy AI, and understanding the full picture of Trustworthy AI makes clear why Responsible AI matters to the business.

“We put trust at the center of everything we do. We use a multidimensional AI framework to help organizations develop ethical safeguards across six key dimensions — a crucial step in managing the risks and capitalizing on the returns associated with artificial intelligence." - Trustworthy AI, Deloitte

Responsible and Accountable AI

Responsibility and accountability start with more deliberate decisions about human oversight. Organizations are deciding whether their systems will be human-in-the-loop (a person approves each decision), human-over-the-loop (a person supervises and can intervene), or even human-out-of-the-loop (fully autonomous). Beyond these choices, organizations also need to designate a set of cross-functional stakeholders to evaluate AI applications.
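To make the three oversight modes more concrete, here is a minimal Python sketch of how a system might decide whether a model output goes to a human reviewer. The `Oversight` enum, the `route` function, and the 0.8 confidence threshold are hypothetical illustrations, not part of any Dataiku or Deloitte product, and the sketch assumes that an over-the-loop system flags only low-confidence outputs for review.

```python
from dataclasses import dataclass
from enum import Enum


class Oversight(Enum):
    IN_THE_LOOP = "in"        # a human approves every decision
    OVER_THE_LOOP = "over"    # automated; low-confidence cases are flagged (assumption)
    OUT_OF_THE_LOOP = "out"   # fully autonomous


@dataclass
class Decision:
    prediction: str
    confidence: float
    needs_human_review: bool


def route(prediction: str, confidence: float, mode: Oversight,
          review_threshold: float = 0.8) -> Decision:
    """Decide whether a model output should be sent to a human reviewer."""
    if mode is Oversight.IN_THE_LOOP:
        review = True
    elif mode is Oversight.OVER_THE_LOOP:
        review = confidence < review_threshold
    else:
        review = False
    return Decision(prediction, confidence, review)


print(route("approve", 0.65, Oversight.OVER_THE_LOOP).needs_human_review)  # True
print(route("approve", 0.95, Oversight.OVER_THE_LOOP).needs_human_review)  # False
```

The threshold is the governance lever here: raising it sends more cases to people, while switching modes changes the oversight model entirely.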

Responsible AI teams cannot work in silos, separate from data science teams. A data team can take a neutral but leading role, but cross-functional collaboration is what ultimately ensures the success of Responsible AI efforts.

Dataiku, for example, features a complete AI governance framework that lets diverse stakeholders such as data scientists, risk managers, and business leaders inform decisions about how each AI project is governed and overseen. With Dataiku Govern, companies can explicitly assign responsibility for reviews and sign-offs at every step of the model build. It also features centralized monitoring and integrated MLOps to close the governance loop after models are deployed into production.

Deloitte’s Take on Transparency and Explainability

Transparency and explainability are related but distinct concepts. Transparency is about what we owe customers regarding the system's output, and how we can benefit end users by giving them additional information. Both the clarity offered to end users when they interact with an AI system and an explanation of how a decision was reached are core to transparency. To provide this level of transparency, model results must be interpretable and easy to explain in plain language.

Explainability, for its part, speaks to our confidence that the AI system is behaving as we expect. Concerned with model architecture, explainability shows in more technical terms how the model's decisions are reached. Here, interpretability and an understanding of how the particular models in the AI system function come into play.

Tolerance varies by situation: the higher the expectation for transparency, as in highly regulated industries, the lower the tolerance is likely to be for models that are not inherently explainable.

On an ML platform, explainability spans the entire project lifecycle, from data preparation through deployment and into production. Dataiku provides core capabilities for documenting and explaining models, including individual explanations and counterfactuals.
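As a rough illustration of individual explanations and counterfactuals, the sketch below uses a toy linear scoring model. For a linear model, each feature's contribution to a single prediction is simply its weight times its value (measured here against an all-zero baseline), and a counterfactual can be found by nudging one feature in fixed steps until the decision flips. The feature names, weights, and helper functions are hypothetical, and this is not how Dataiku computes its explanations; tools that handle non-linear models generally need more general, model-agnostic methods.

```python
# Hypothetical linear scoring model: score = BIAS + sum(weight * value).
WEIGHTS = {"income": 0.5, "debt_ratio": -0.75, "years_employed": 0.25}
BIAS = -1.0
THRESHOLD = 0.0  # score >= THRESHOLD means "approve"


def score(applicant):
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def explain(applicant):
    """Each feature's contribution to this one prediction (exact for a linear model)."""
    return {f: WEIGHTS[f] * applicant[f] for f in WEIGHTS}


def counterfactual(applicant, feature, step, max_steps=100):
    """Change `feature` in increments of `step` until the decision flips.

    Returns a modified copy of the applicant, or None if no flip within max_steps.
    """
    original = score(applicant) >= THRESHOLD
    candidate = dict(applicant)  # never mutate the caller's record
    for _ in range(max_steps):
        candidate[feature] += step
        if (score(candidate) >= THRESHOLD) != original:
            return candidate
    return None


applicant = {"income": 1.0, "debt_ratio": 1.0, "years_employed": 1.0}
print(score(applicant))  # -1.0, so the applicant is rejected
print(explain(applicant))
print(counterfactual(applicant, "income", 0.5)["income"])  # 3.0
```

Read together, the two outputs answer different questions: the explanation shows that `debt_ratio` pulls the score down the most, while the counterfactual tells the applicant what change (here, `income` rising from 1.0 to 3.0) would have produced approval.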

Ensuring Model Fairness

Today, more than ever, organizations scrutinize the impartiality of their models and investigate more closely the biases that affect the fairness of their AI systems. Fortunately, tools and techniques for assessing model features provide qualitative and quantitative ways to check for fairness. The task is then to apply these tools and techniques properly and consistently. Dataiku features a model fairness report with the most common metrics, including demographic parity, equality of opportunity, and equalized odds, among others, to determine whether a model is biased against a particular group or fails to make accurate predictions for a group.
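The three metrics named above compare model behavior across groups: demographic parity compares selection rates (the share of positive predictions), equality of opportunity compares true positive rates, and equalized odds compares both true positive and false positive rates. Below is a minimal pure-Python sketch for two groups, assuming binary labels and predictions encoded as 0/1. The function names are hypothetical, equalized odds is reported as the larger of the two rate gaps (one common convention), and the output is not Dataiku's fairness report.

```python
def _rate(values):
    """Mean of a list of 0/1 values; NaN if the list is empty."""
    return sum(values) / len(values) if values else float("nan")


def group_rates(y_true, y_pred, groups, group):
    """Selection rate, true positive rate, and false positive rate for one group."""
    idx = [i for i, g in enumerate(groups) if g == group]
    selection = _rate([y_pred[i] for i in idx])
    tpr = _rate([y_pred[i] for i in idx if y_true[i] == 1])
    fpr = _rate([y_pred[i] for i in idx if y_true[i] == 0])
    return selection, tpr, fpr


def fairness_gaps(y_true, y_pred, groups, a, b):
    """Absolute gaps between groups `a` and `b` for three common fairness metrics."""
    sel_a, tpr_a, fpr_a = group_rates(y_true, y_pred, groups, a)
    sel_b, tpr_b, fpr_b = group_rates(y_true, y_pred, groups, b)
    return {
        "demographic_parity_diff": abs(sel_a - sel_b),
        "equal_opportunity_diff": abs(tpr_a - tpr_b),
        # equalized odds: report the larger of the TPR and FPR gaps
        "equalized_odds_diff": max(abs(tpr_a - tpr_b), abs(fpr_a - fpr_b)),
    }


groups = ["A"] * 4 + ["B"] * 4
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0]
print(fairness_gaps(y_true, y_pred, groups, "A", "B"))
# {'demographic_parity_diff': 0.25, 'equal_opportunity_diff': 0.0, 'equalized_odds_diff': 0.5}
```

In this made-up data, both groups' qualified members are always approved (no equal-opportunity gap), yet group A's unqualified members are approved half the time while group B's never are. This is why a report checks several metrics rather than one.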

AI Robustness and Reliability

As for robustness and reliability, we continue to deploy AI systems in more and more decisions and interactions, and with that comes a demand to make sure those systems perform as intended. Third-party data has historically been a particularly high-risk area, and organizations will need to determine to what degree they are willing to take responsibility for the actions of the third parties they work with.

To ensure models are robust enough to withstand stressors in live production environments, Dataiku offers tools to evaluate models, including diagnostics, what-if analysis, ML assertions, and stress testing.
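ML assertions and stress tests can be sketched in a few lines. In the hypothetical example below, an assertion states that at least a given share of rows matching a condition should receive an expected prediction, and a simple stress test measures how often predictions stay the same when random noise is added to one feature. The function names, the toy model, and the uniform-noise perturbation are assumptions for illustration; they do not reproduce Dataiku's own implementation of these features.

```python
import random


def check_assertion(model, rows, condition, expected, min_ratio):
    """Check that at least `min_ratio` of rows matching `condition` get `expected`.

    Returns (passed, observed_ratio), or None if no row matches the condition.
    """
    subset = [r for r in rows if condition(r)]
    if not subset:
        return None
    hits = sum(1 for r in subset if model(r) == expected)
    ratio = hits / len(subset)
    return ratio >= min_ratio, ratio


def stress_test(model, rows, feature, noise, seed=0):
    """Share of predictions unchanged after adding uniform noise to one numeric feature."""
    rng = random.Random(seed)  # seeded so results are reproducible
    unchanged = 0
    for r in rows:
        perturbed = dict(r)
        perturbed[feature] += rng.uniform(-noise, noise)
        if model(perturbed) == model(r):
            unchanged += 1
    return unchanged / len(rows)


# Hypothetical toy model: flag records whose debt ratio exceeds 0.5.
def toy_model(row):
    return "high_risk" if row["debt_ratio"] > 0.5 else "low_risk"


rows = [{"debt_ratio": d} for d in (0.9, 0.8, 0.2, 0.7)]
print(check_assertion(toy_model, rows, lambda r: r["debt_ratio"] > 0.6, "high_risk", 0.9))
# (True, 1.0)
print(stress_test(toy_model, rows, "debt_ratio", noise=0.05))  # 1.0
```

Increasing `noise` until the stable share drops is a quick way to see how close real records sit to the model's decision boundary.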

Addressing AI Privacy Concerns

In terms of privacy, it’s no secret that demand for personal data is higher than ever. That demand comes both from systems that “think” with data and from systems that act on the insights drawn from it. As these processes intensify and cross jurisdictions, privacy concerns are naturally increasing. Organizations must take the time to consider how their own use of data complies with regulatory requirements, guidance, and privacy legislation going forward.

Keeping AI and Customer Data Safe and Secure

For security, we’re seeing increased concern and regulatory scrutiny over external actors' ability to manipulate or reverse engineer AI systems. Organizations must stay alert. ML platforms need to follow best practices and achieve industry certifications such as ISO 27001 to guard against cybersecurity threats and to protect and govern customer data.

Balancing Governance and Innovation

Risk is inherent, so choosing the right level of control to mitigate it is critical. It’s about finding a balance between the need to innovate quickly and the need to govern and control AI. Remember: there is no single right answer or one-size-fits-all solution when it comes to AI Governance. The AI Governance framework must reflect that building AI is a flexible, experiment-driven journey. At the end of the day, AI Governance should be an accelerator for AI applications, not a roadblock.

Managing Deployed Models

Deployment is where the risk present in the development and production stages of the model life cycle becomes concrete. It is the stage where unintended impacts can land organizations in negative headlines, so Trustworthy AI must extend beyond production and into deployment and user interaction. As the model interacts with end consumers, model monitoring becomes critical for keeping model risk under control. Ensuring the quality of model outputs, defining checkpoints, and evaluating how effective models are in particular environments are all critical parts of the model monitoring and observability that promote Trustworthy AI. To craft an effective strategy for Trustworthy and Responsible AI through model deployment, enterprises need a governance framework and a platform that enable each of the six components of the Trustworthy AI framework.
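One common way to monitor a deployed model is to track drift between the data it was trained on and the data it sees in production. The sketch below computes the Population Stability Index (PSI), a widely used drift statistic, over fixed bins. It is one illustrative technique, not the monitoring method of any particular platform, and the bin edges and the small floor applied to empty bins are assumptions.

```python
import math


def psi(expected, actual, bins):
    """Population Stability Index between a baseline sample and a live sample.

    `bins` is a sorted list of edges; values outside [bins[0], bins[-1]] are ignored.
    """

    def shares(values):
        counts = [0] * (len(bins) - 1)
        for v in values:
            for i in range(len(bins) - 1):
                # bins are half-open [lo, hi), except the last, which includes its upper edge
                is_last = i == len(bins) - 2
                if bins[i] <= v < bins[i + 1] or (is_last and v == bins[-1]):
                    counts[i] += 1
                    break
        total = sum(counts)
        if total == 0:
            raise ValueError("no values fall inside the bin range")
        # floor empty bins at a tiny share so log() never sees zero
        return [max(c / total, 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


bins = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline = [0.1, 0.4, 0.6, 0.9]   # training-time distribution
live = [0.9, 0.9, 0.9, 0.6]       # production data that has shifted upward
print(psi(baseline, baseline, bins))  # 0.0
print(psi(baseline, live, bins) > 0.25)  # True
```

A frequently cited rule of thumb treats PSI above roughly 0.25 as a significant shift worth investigating, but thresholds, like the other checkpoints described above, should be set per use case.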